For normal colour image work I tend to use LAB colour mode only for colour correction or to bring out some ‘colour pop’. Like many, I work in RGB colour mode most of the time. In Lightroom, for instance, RGB is the only colour mode you can work in, whilst in Photoshop you can choose between several, e.g. RGB, the normal/default colour mode, and CMYK, which is usually used for professional printing.

When you convert your image to Lab Colour mode, the colour channels change to show ‘Lightness’, ‘a’ and ‘b’. Lightness is like a black-and-white version of the image, while ‘a’ and ‘b’ together carry all the colour information: ‘a’ runs from green to magenta and ‘b’ from blue to yellow. Between them they can even describe colours that ‘don’t exist’!

[Image: LAB Mode Colour Space]

Crucially, this means that you can enhance colour and detail independently of one another, producing a vibrancy of colour that is very difficult to achieve in RGB mode.
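To make the separation of colour and detail a little more concrete, here is a minimal Python sketch of an sRGB to Lab conversion, followed by a ‘colour pop’ that scales a and b while leaving Lightness alone. The function names are my own, and I have assumed the standard sRGB formulas with a D65 white point; Photoshop's conversion is colour-managed, so don't expect its numbers to match exactly.

```python
def srgb_to_lab(r, g, b):
    """Convert 8-bit sRGB values to CIE Lab (D65 white point assumed)."""

    def to_linear(c):
        # Undo the sRGB gamma encoding
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear sRGB -> CIE XYZ
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # CIE Lab companding function
        if t > 216 / 24389:
            return t ** (1 / 3)
        return (24389 / 27 * t + 16) / 116

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    lightness = 116 * fy - 16   # tonal detail only
    a = 500 * (fx - fy)         # green (-) to magenta (+)
    b_axis = 200 * (fy - fz)    # blue (-) to yellow (+)
    return lightness, a, b_axis


def boost_colour(lab, factor):
    """Scale a and b only; Lightness is passed through untouched."""
    lightness, a, b_axis = lab
    return lightness, a * factor, b_axis * factor


if __name__ == "__main__":
    muted = srgb_to_lab(150, 140, 130)   # a dull, slightly warm grey
    print(muted)
    print(boost_colour(muted, 3.0))      # same Lightness, stronger colour
```

A neutral grey comes out with a and b both very close to zero, which is why pushing a and b hard changes colour without disturbing the tonal detail held in Lightness.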

Why is this important?

As mentioned above, colour casts are relatively easy to correct in LAB mode. Also, if your image is rather ‘flat’, i.e. its colours occupy only a small part of the colour space, LAB processing will help separate out the limited colours that are present.

If you are processing digital infrared (IR) images, you will have both of these issues to correct: a large red colour cast that can’t readily be corrected via the temperature sliders and, once the red colour cast is eliminated, a very narrow colour range, especially if your IR conversion is 720nm or longer.

It is worth mentioning up front that if you want to carry out monochrome processing, i.e. eliminate colour from the image, then what follows is not that important. Having said that, if you are using, say, Silver Efex Pro II to carry out your B&W conversion, or Lightroom for that matter, you may still wish to colour correct and/or channel swap your image.

In this post I’ll illustrate a very basic LAB-based IR processing workflow, using the following image from my 720nm-converted 50D.

Also, to further illustrate the power of a LAB-based workflow, I won’t bother to undertake any camera calibration, i.e. I’ll just work on the IR RAW image in Photoshop. Note that the exposure for this capture was set using Magic Lantern Auto-ETTR, hence the exposure is skewed to the right, but there are no overexposed highlights. The ETTR capture ensures we have the ‘best’ available tonal density to play around with.

[Image: RAW, as captured]

Once the image is in Photoshop, the first thing to do is to move from RGB (the default) to LAB colour mode. This is done via the Image>Mode menu. The image will not change in appearance.

My first correction step is to carry out an a/b ‘channel swap’, as I wish to drive my sky to be blue: I tend to use the sky as my reality-touchstone when processing colour IR images. As there is no channel swap available in LAB mode, the easiest way to achieve this is via a curves adjustment layer.

Once the curves adjustment layer is in place, simply invert the a and b channel curves by moving the left-hand end point to the top and the right-hand end point to the bottom. The linear curve should now be sloping down from left to right, rather than up from left to right.
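For those who like to see the arithmetic, the inverted curve can be sketched in a few lines of Python. I have assumed an idealised, symmetric scale of -128 to +128 for a and b, with zero as neutral; this is for illustration only and is not Photoshop's exact internal encoding. The function name is hypothetical.

```python
AB_MAX = 128.0  # assumed symmetric a/b scale, 0 = neutral (illustrative only)


def inverted_curve(value):
    """Straight-line curve running from (-128, +128) down to (+128, -128)."""
    # Position of the input along the horizontal axis, 0.0 to 1.0
    t = (value + AB_MAX) / (2 * AB_MAX)
    # Read the output off the downward-sloping line
    return AB_MAX - t * 2 * AB_MAX


if __name__ == "__main__":
    # A pixel on the yellow side of b (+40) lands on the blue side (-40)
    print(inverted_curve(40.0))   # -40.0
    # Neutral pixels stay neutral
    print(inverted_curve(0.0))    # 0.0
```

In other words, the inverted curve simply flips the sign of each channel: magenta becomes green on a, and yellow becomes blue on b, while anything neutral is left where it was.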

The image will look horrible, because of the inverted red cast. The next step is to correct this.

Basic colour correction can be achieved by moving the black and white points on the base curve in towards the middle. You will need to play around with both the a and b channels. Also, because you are in LAB mode, you can be aggressive with your slider movements, but try to remain reasonably symmetrical, or you will introduce additional colour casts.

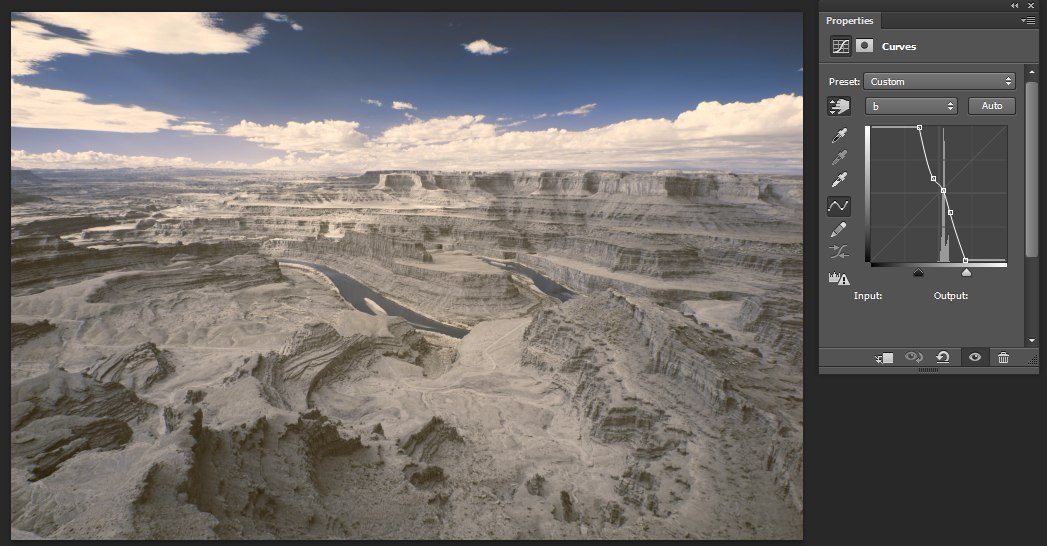

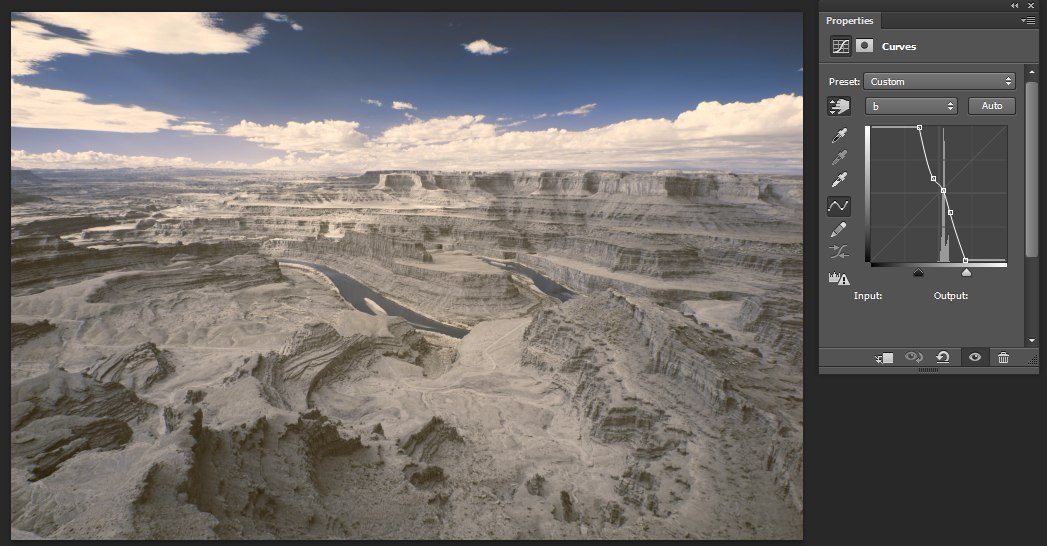
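The effect of pulling the end points in, and the reason symmetry matters, can be sketched numerically. As before, this is only an illustration on an assumed symmetric -128 to +128 scale, using an upward-sloping curve for simplicity (the inverted version just flips the sign of the output). The function and parameter names are my own.

```python
def steepened_curve(value, in_low, in_high, out_max=128.0):
    """Straight line from (in_low, -out_max) to (in_high, +out_max), clamped.

    in_low and in_high stand in for the black and white points after they
    have been dragged in towards the middle of the curve.
    """
    t = (value - in_low) / (in_high - in_low)
    t = min(1.0, max(0.0, t))          # inputs beyond the end points clip
    return -out_max + t * 2 * out_max


if __name__ == "__main__":
    # Symmetrical end points: colour is amplified, neutral stays neutral
    print(steepened_curve(8.0, -32.0, 32.0))   # 32.0, a fourfold boost
    print(steepened_curve(0.0, -32.0, 32.0))   # 0.0

    # Asymmetrical end points: a neutral pixel is pushed off zero,
    # which shows up in the image as a new colour cast
    print(steepened_curve(0.0, -32.0, 16.0))
```

The steeper the line, the further apart the few colours in the image are spread, which is exactly what a narrow-gamut IR capture needs.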

Further curve corrections can be carried out by shaping the curve. Unlike an RGB curve, a LAB curve can be ‘insulted’ a lot before you have ‘problems’. The following shows the base corrections, achieved with a single LAB curves adjustment layer, with the b channel adjustment curve displayed.

[Image: Basic colour correction using LAB mode]

This is step one in the post-processing, i.e. colour correction and basic colour ‘creation’. Additional processing will be required to make a final image. We will discuss subsequent processing steps, e.g. making use of luminosity masks, in future posts.

Bottom line: if you wish to create colour (sic) IR imagery, you will be well served by learning about a LAB-based processing workflow. In future posts I will explore LAB processing in more detail. However, I hope this basic introduction has helped convince die-hard RGB post-processors that there is more to life in LAB colour space!